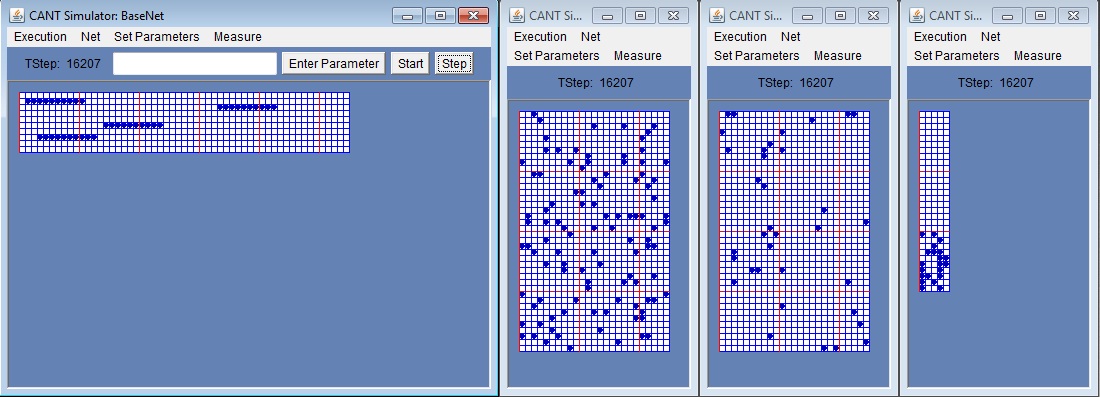

Old Categorisation Results

- So, we played around with some topologies and came up with a four-net topology.

- The input (base) subnet represents the input vectors, and the output net on the right represents the categories.

- Connections run forward input->som->hidden->output; som, hidden and output are each self-connected; and they run back output->hidden->som.

- Learning on connections from the input net is post-compensatory; learning on all other connections is pre-compensatory.

- Here's part of a neural simulation that learns to categorise irises. It got about 93% correct, which is a reasonable score on this task.

- The picture shows the net during training.

- Blue circles are firing neurons, and the white squares are neurons that don't fire in this cycle.

- One iris instance is presented in the base net, and the net is told that its category is three; both are done by externally activating the relevant neurons.

- The firing in the middle two subnets (som and hidden) is not externally driven; it comes from activation spreading along connections shaped by learning.

- This is old work; similar nets were used for other tasks, including categorising yeast (52%).

- The approach is pretty general.

- What we'd like is for the correct category neurons to fire for a given input.
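The topology and the goal above can be written down as plain data plus a readout. This is a minimal sketch under stated assumptions, not the simulator used here: the subnet names, the idea that the output net is split into equal-sized groups of neurons (one group per category), and the majority-vote readout are hypothetical choices for illustration. The compensatory learning rules themselves are not modelled; the sketch only records which rule applies to each connection. The firing sets in the usage example are toy values, not results.

```python
from collections import Counter

# Hypothetical subnet names for the four-net topology.
# Each pair is (source subnet, destination subnet).
CONNECTIONS = [
    ("input", "som"), ("som", "hidden"), ("hidden", "output"),   # forward path
    ("som", "som"), ("hidden", "hidden"), ("output", "output"),  # self connections
    ("output", "hidden"), ("hidden", "som"),                     # backward path
]


def learning_rule(source):
    """Connections from the input net are post-compensatory; all others
    are pre-compensatory. The rules themselves are not modelled here."""
    return "post-compensatory" if source == "input" else "pre-compensatory"


def categorise(firing, group_size, n_categories):
    """Assumed readout: output neuron i belongs to category i // group_size.
    Return the category with the most firing neurons, or None if no
    category neuron fires or the top count is tied."""
    counts = Counter(i // group_size for i in firing
                     if 0 <= i < group_size * n_categories)
    if not counts:
        return None
    best = max(counts.values())
    winners = [c for c, n in counts.items() if n == best]
    return winners[0] if len(winners) == 1 else None


def accuracy(predictions, labels):
    """Fraction of instances whose predicted category matches the label."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


if __name__ == "__main__":
    for src, dst in CONNECTIONS:
        print(f"{src}->{dst}: {learning_rule(src)}")
    # Toy firing sets (hypothetical) for three instances, 10 neurons per category.
    firing_sets = [{0, 1, 12}, {11, 13, 25}, set()]
    preds = [categorise(f, group_size=10, n_categories=3) for f in firing_sets]
    print(preds, accuracy(preds, [0, 1, 2]))
```

Reading the category as a vote over groups of output neurons is one simple choice; a stricter readout could count an instance as correct only when the correct group fires and no other group does.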